The culture of programmers and other technologists is plagued by toxic elitism. One of the manifestations of this elitism is an unrelenting hostility toward so-called “non-technical” people (a distinction that’s also ready for retirement), beginners, and ultimately anyone asking for help. If you’re unconvinced, please spend a few minutes browsing the popular¹ question-and-answer site, Stack Overflow² (just make sure you prepare yourself emotionally beforehand).


The title of this post is not broad enough. Avoid emoji as any kind of identifier, whether as strings in your scripts, IDs on your elements, classes in your CSS, and so on. As soon as you start using emoji, you block some users from being able to understand or use your code. It doesn’t matter how popular the technique becomes (or doesn’t).
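
As a rough illustration, a build step could scan identifiers for emoji before they ship. The sketch below is hypothetical: `find_emoji_identifiers` is not part of any existing tool, and it uses the Unicode general category `So` (“Symbol, other”) as a stand-in for “emoji.” That approximation also flags symbols such as ©, but it deliberately leaves letters from Hebrew, Cyrillic, CJK, and other non-Latin scripts alone.

```python
import unicodedata


def emoji_like_chars(identifier: str) -> list[str]:
    """Return the characters in `identifier` whose Unicode general category
    is "So" (Symbol, other), which covers most emoji.

    Approximation: this also flags non-emoji symbols such as "©", and it does
    NOT flag letters from non-Latin scripts, whose categories start with "L".
    """
    return [ch for ch in identifier if unicodedata.category(ch) == "So"]


def find_emoji_identifiers(identifiers: list[str]) -> dict[str, list[str]]:
    """Map each offending identifier to the emoji-like characters it contains."""
    report: dict[str, list[str]] = {}
    for name in identifiers:
        found = emoji_like_chars(name)
        if found:
            report[name] = found
    return report


if __name__ == "__main__":
    # Hypothetical class names and IDs pulled from a stylesheet or template.
    names = ["nav-primary", "btn-🔥", "שלום", "🎉"]
    print(find_emoji_identifiers(names))
```

A real check would also need to extract class names and IDs from your CSS, markup, and scripts; that parsing is left out here.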

If you want to read or type in Hebrew or any other non-Western language on a notetaker, be prepared to turn off the speech and essentially trick the braille display, if there is one, into accepting Hebrew braille. You have to turn off the speech because every notetaker embeds Eloquence, and Eloquence absolutely does not speak Hebrew, so otherwise you can’t think in Hebrew while typing. Want to interact with Hebrew text on your phone and get braille feedback? Hahahahahahahaha, no. Even if VoiceOver and TalkBack support Hebrew (VO supports Hebrew and will smoothly transition between it and other languages), braille displays don’t. And braille displays absolutely do not support Unicode to any extent.

More broadly, regarding non-Western languages and code, I don’t think we should keep asking developers who are not native English speakers, and whose language isn’t written in Latin characters, to make sure their English is good enough to code. That seems like an all-too-arbitrary requirement to me. So it’s not that I disagree with Adrian: he’s acknowledging the reality on the ground, and practically speaking, his advice is what we need to follow. I just think it’s pathetically stupid that coding in general, and assistive technology in particular, are as ethnocentric as they are.

What can we learn about the Mueller Report from the PDF file released by the Department of Justice on April 18, 2019? This article offers two things: a brief, high-level technical assessment of the document, and a discussion of why everyone assumed it would be delivered as a PDF file, and would have been shocked otherwise.

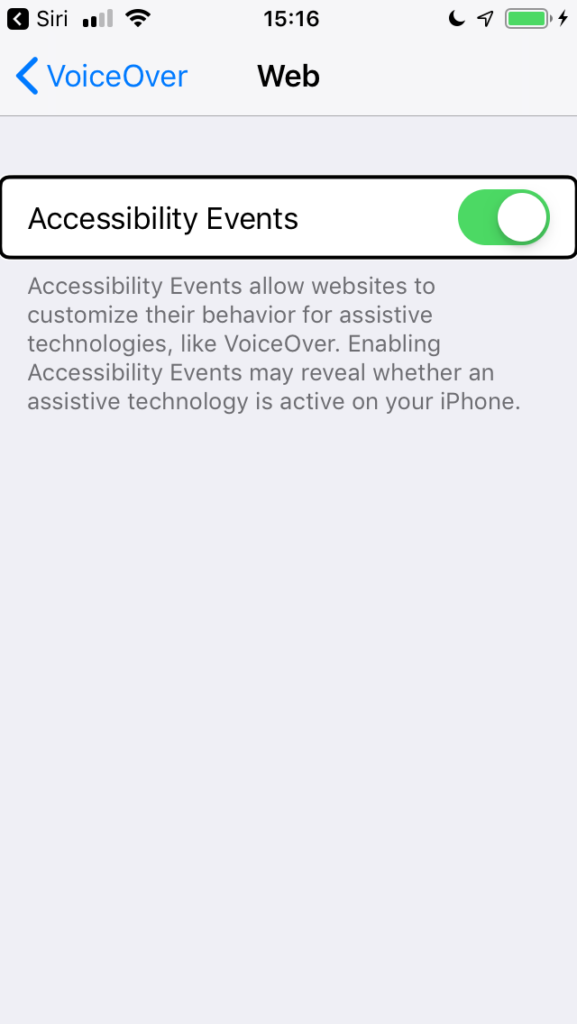

Apple is now apparently saying that its Accessibility Events feature (you know, the one that “may reveal whether an assistive technology is active on your iPhone”) is not enabled by default. Like hell it’s not enabled by default. It sure was enabled when I installed the iOS 12.2 update on my iPhone 8+ last weekend. I specifically went into General > Accessibility > VoiceOver to check, and had to turn the feature off. This note includes a screenshot of my just-updated iPhone 5S, and as sure as the sun is shining, the Accessibility Events feature was turned on. I have a severe allergy to BS, and Apple doesn’t get to bypass the BSometer just because it has a history of caring a lot about accessibility.

Websites should be designed and developed from the beginning with accessibility in mind. The guidelines have been out there, freely available and complete with extensive documentation so that they can be understood, for over twenty years. A metric ton of freely available information from the accessibility community of practice, covering every aspect of those guidelines, has been all over the internet for basically as long as the guidelines themselves have existed. This community of practice has already covered assistive technology tracking, and we’re probably all tired of it. For Apple to lie about something as simple as whether the feature is on by default indicates at least some corporate squeamishness about implementing it in the first place, and the best thing it could do at this point is remove it.

No, seriously, you really do need to caption your videos: the Harvard and MIT edition

Both MIT and Harvard have argued in court filings that they should not be required to provide closed captions for every video they create or host on their websites. After the institutions’ first attempt to dismiss the cases was denied, there was a yearlong attempt to reach a settlement out of court. When that attempt failed, the universities again moved to dismiss the cases.

Judge Katherine A. Robertson of the U.S. District Court of Massachusetts largely rejected the universities’ second attempt to dismiss the cases. On March 28, Robertson denied the institutions’ pleas for the exclusion of their websites from Title III of the Americans With Disabilities Act and Section 504 of the Rehabilitation Act. Title III of the ADA prohibits disability discrimination by “places of public accommodation.” Section 504 of the Rehabilitation Act prohibits discrimination on the basis of disability in programs that receive federal funding.

My eyes are stuck in the rolled position, and this time I think it’s permanent. I may be missing something, but the only exception to captions in WCAG SC 1.2.2 or its “Understanding” documentation is for media that is itself an alternative for text and is clearly labeled as such. I’m not deaf, and I’m getting tired of the excuses for the lack of captions or transcripts. How many times does some variation of “it’s not popular enough” or “I can’t afford it” or “it’s too hard” have to be tossed out? People and organizations will spend hundreds or even thousands of dollars on audio or video equipment, only to not caption or transcribe the content they create. Harvard and MIT can both afford to transcribe their content, so hearing them say they care about accessibility, as long as that doesn’t mean captioning all of their content, is especially galling. I suppose if we’re talking about a podcast that’s just starting out, or a one-man shop, I could see why you might not come out of the gate with captions or transcripts. But even that only works to a point: if you’re pouring hundreds of dollars into a good headset or other higher-end audio equipment, then at some point you should be making arrangements for captions or transcripts. It goes without saying that Harvard and MIT aren’t in the one-man-shop category.

By using custom, accessible focus states, we can make websites much easier to use for people who navigate with the keyboard.
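
Whatever a custom focus style looks like, it has to be visible against whatever it is drawn on. WCAG 2.1 SC 1.4.11 (Non-text Contrast) asks for a contrast ratio of at least 3:1 for the visual indicators of user interface component states, and focus is one of those states. Here is a minimal sketch of checking a focus-ring color against its background using the WCAG relative-luminance and contrast-ratio formulas; the function names and sample colors are hypothetical.

```python
def channel_to_linear(c8: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x
    relative-luminance definition."""
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a "#rrggbb" color (0.0 = black, 1.0 = white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * channel_to_linear(r)
            + 0.7152 * channel_to_linear(g)
            + 0.0722 * channel_to_linear(b))


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio between two colors, from 1.0 up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def focus_ring_ok(ring: str, background: str, minimum: float = 3.0) -> bool:
    """True if a focus-ring color meets the 3:1 non-text contrast threshold
    against the background it is drawn on."""
    return contrast_ratio(ring, background) >= minimum


if __name__ == "__main__":
    # Hypothetical palette: a dark blue ring and a pale gray ring on white.
    for ring in ("#1a4fd6", "#cccccc"):
        ratio = contrast_ratio(ring, "#ffffff")
        print(ring, round(ratio, 2), focus_ring_ok(ring, "#ffffff"))
```

This only checks color; it says nothing about the outline’s thickness or offset.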